Over the past 12 months or so, I’ve watched the increasing media blitz surrounding the rise of AI generated art. All of humanity has formed into two camps. Some people argue that this is a great idea that will revolutionize the art scene. Other people believe this is an unprecedented disaster and AI art should be banned. I think they’re both wrong. In this article I’ll explain why.

First, here’s an explanation of what AI generated art is.

Deep learning algorithms such as Generative Adversarial Networks (GANs) and transformers generate art not by following a set of hand-written rules but by “learning” a specific aesthetic: they analyze thousands of images and produce results based on that analysis.

Using the aesthetic it has learned, the algorithm tries to create new images that stick to that aesthetic. Later computer systems comprehend human language, learn from experience, make more predictions, mimic the thinking process of humans, and produce rich outputs...

In terms of artificial intelligence, the state of the art is Deep Neural Networks and Predictive Analysis, since Deep Learning models deal with tasks such as voice recognition and image recognition. These capabilities let the machine recognize objects. Computer systems can grasp the meaning of human language, learn from experience, and make predictions. Those predictions are then processed to produce diverse art.
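To make the "learn an aesthetic from examples, then produce results from it" idea concrete, here is a deliberately tiny sketch. It is not a GAN or a transformer. It swaps in a far simpler technique: estimating per-channel color statistics and sampling from them. The example colors and the function names `learn_style` and `generate_color` are made up for illustration.

```python
import random
import statistics

# Hypothetical training set: RGB colors sampled from paintings in a warm "sunset" style.
EXAMPLES = [(250, 120, 40), (240, 100, 60), (255, 140, 30), (230, 90, 70), (245, 110, 50)]

def learn_style(examples):
    """Summarize each color channel by its mean and standard deviation."""
    channels = list(zip(*examples))  # regroup as (all reds, all greens, all blues)
    return [(statistics.mean(c), statistics.stdev(c)) for c in channels]

def generate_color(style, rng):
    """Sample a new color from the learned statistics, clamped to the 0..255 range."""
    return tuple(min(255, max(0, round(rng.gauss(mu, sigma)))) for mu, sigma in style)

style = learn_style(EXAMPLES)
print(generate_color(style, random.Random(0)))
```

The generated color will usually look like it belongs with the examples, yet nothing in the code knows what a sunset is. It only reproduces statistics of its inputs.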

The 2004 Will Smith movie I, Robot has little to do with the Isaac Asimov story it’s allegedly based on, but it is, I think, an underrated commentary on the concept of artificial intelligence and how it plays on our fears. Will Smith plays a cop suffering from severe survivor’s guilt from a car wreck years ago. The accident left him and a young girl trapped in a lake, and a passing android dived in to attempt a rescue. To Will Smith’s dismay, the android rescued him and left the little girl to drown. A person with a sense of humanity would have known to rescue the little girl first, but the android did the opposite, and actually had good reason for doing so. It detected two life forms at the bottom of the lake and determined that Will Smith had the best chance of survival, so it rescued him.

When I first watched the movie, I sort of understood this explanation for Will Smith being upset and prejudiced toward androids, but didn’t truly understand why until very recently. Will Smith is one of the very few people in the world who understands androids for what they truly are. They aren’t cute and they’re not our friends. They’re mindless automatons, incapable of emotion, that act on programming alone. And yet people mindlessly accept androids into every aspect of their lives and it could all backfire catastrophically. This is all quite clear in the movie, but the reason I say I didn’t understand why Will Smith acted this way is that, as a teenager, I did not yet appreciate the difference between acting out of reason and acting out of emotion. That’s also why I think I, Robot has deeper writing than it is usually given credit for. Will Smith hates androids out of emotion, not reason. He can’t coherently explain why he hates androids, and more critically, this prejudice leads him to irrational and stupid conclusions, not logical ones. For example, early in the movie he “racially profiles” an android running down the street with a purse and tackles it to the ground. In reality, the android was just racing to help a middle-aged woman who needed her inhaler. It’s logical to think a human being might want to steal a purse, but it’s stupid to think an android would. What would it even do with it? Will Smith’s irrational and sometimes violent behavior leads everyone else to think he’s a bit of a lunatic. And really, he is.

Later on, Will Smith questions an android accused of murdering the scientist who built him, and this is some of my favorite dialogue in movie history.

Will Smith: Can a robot write a symphony? Can a robot turn a canvas into a beautiful masterpiece?

Android: Can you?

It’s such a brutal burn and there’s no comeback to it. He’s absolutely right. The vast majority of people are boring and stupid, and completely uninteresting. Our skills are unimpressive and our jobs are so mindless and repetitive, we can be easily, effortlessly replaced by robots when they become advanced enough.

This scene with Will Smith is the perfect analogy for why the advances of AI generated artwork provoked such a backlash from so many people. Art was our one safe space, the one thing that separated us from the machines. Humans can be creative and use our imaginations. Machines can’t and that’s it. But wait, they can! Machines can produce art just as good if not better than ours!

And so began the android revolution. AI-generated art. A lot of people on the internet expressed panic, anger, and indignation. They pointed out some serious problems with AI-generated art. It “learns” based on the input of art created by real-life people. Isn’t this essentially plagiarism? Another commonly-cited problem is that AI art in the mainstream would effectively annihilate the commercial art industry on forums like Fiverr.

Guys, don’t panic.

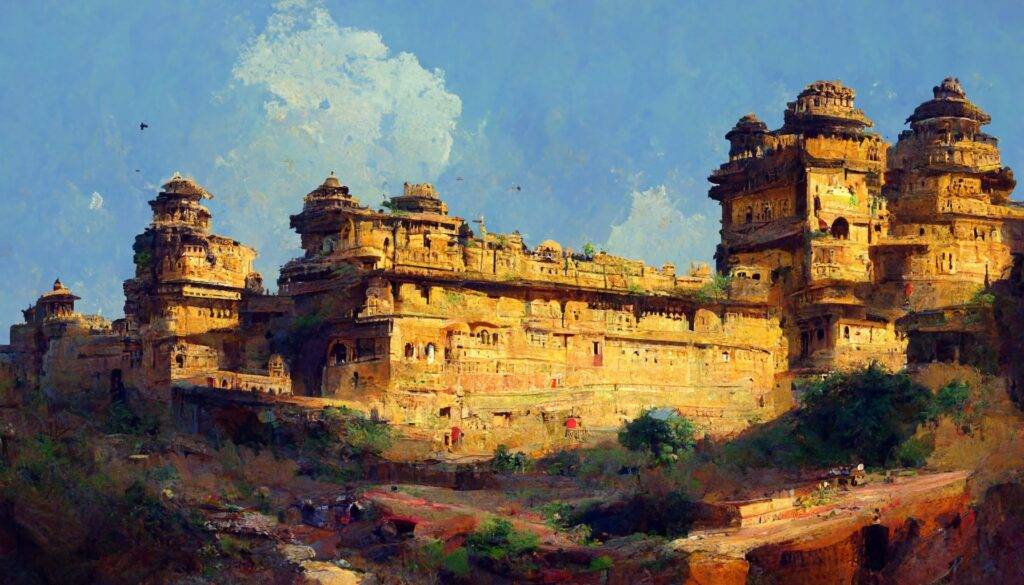

To illustrate (get it?) why we shouldn’t panic, here’s a specific case study. Today on the internet I saw a certain indie author who gleefully fired his Fiverr artist and replaced him with AI-generated cover imagery. Here’s the new AI “art” he merrily boasted about on social media:

At first glance, these images would terrify any normal person, and rightly so. They’re extremely well-textured and detailed, equal to or arguably better than the work of even a highly skilled artist. How can mere humans compete against a machine that can create such masterpieces in a matter of seconds?

But think about it. Can a machine think? Can a machine have understanding and imagination like a real human artist can? Of course not. Now look at these images again. Actually take more than a few seconds to analyze them. If you’re still not sure what I mean, I’ll describe them one by one. Start with Image #1 on the left. The warrior’s armor and weapon seem to dissolve into the skin of the dragon. Now look at the boat. It structurally doesn’t make any sense, and the patterns on it are random gibberish. Now look at the wings of the dragon. Are those wings, or are they sails? It’s not actually clear because, again, it’s not a deliberate artistic decision, it’s random pixels vomited onto the screen by an AI that literally doesn’t know what it’s creating. All the AI is doing is attempting to mimic patterns, and usually failing at them. Pay particular attention to the dragon’s jaw too. It doesn’t structurally make sense and the perspective lines are wrong.

Image #2 is, in my opinion, the best drawn of the series, but only by accident. The green girl’s arm is anatomically incorrect, and the weapons are just randomly generated rods that go nowhere in particular. Like in image #1, the boat they’re standing on has no perspective lines and doesn’t make any sense.

And this is why AI art will not go anywhere, and can’t go anywhere. AI doesn’t know what a boat is, or what a dragon is, or what a girl is. AI is not actually drawing boats, dragons, and girls. It’s producing patterns of pixels based on inputs.

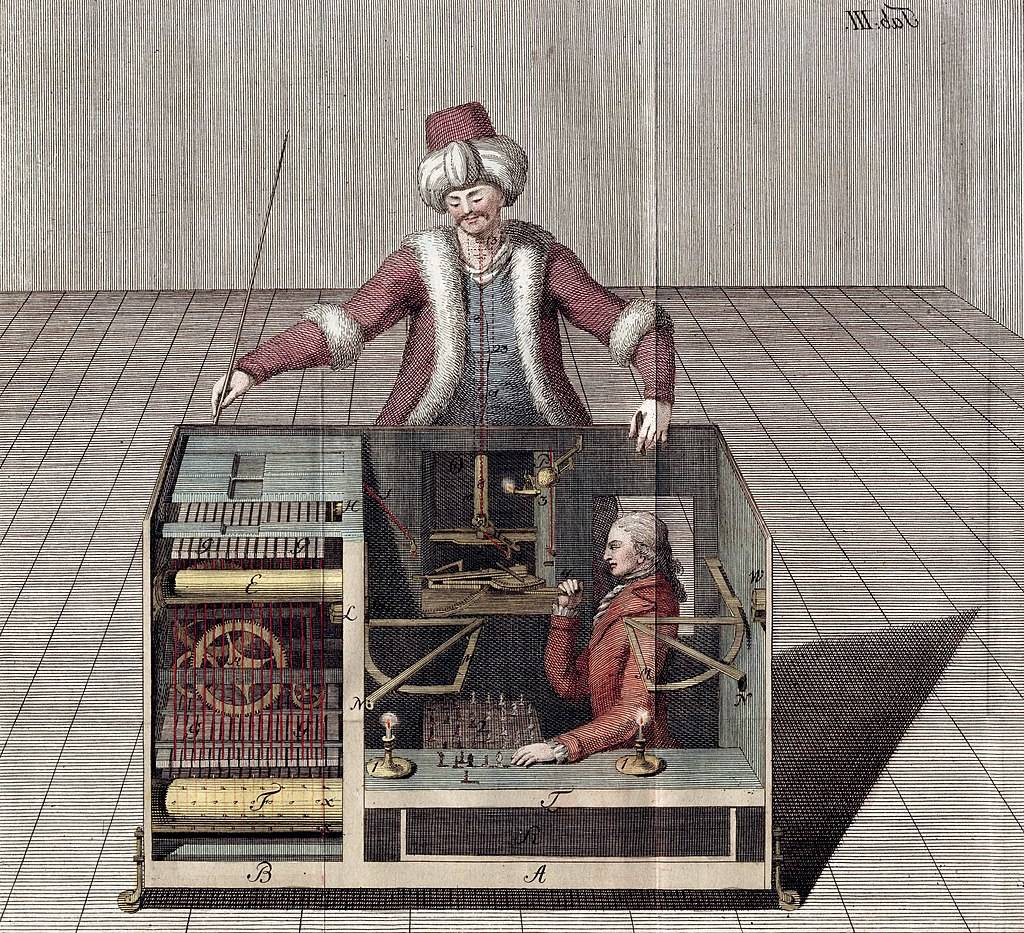

Now at this point I’m sure some readers think I’m being unfair or pretentious, but I’m not. This is not a new problem. Quite the opposite, we keep running into this problem over and over again. We set up milestones to define a successful “artificial intelligence” but then find out that intelligence was actually unnecessary for those tasks the whole time. Perhaps the first milestone humans set for AI was chess. The whimsical idea of a machine that plays chess goes back centuries, though all of the early machines turned out to be frauds. If an AI could beat a human at chess, then presumably, that AI qualifies as a thinking creature.

Imagine you have to play a game against a world-famous chess champion. That might sound impossible but you have a crucial advantage. The champion has five seconds to decide on each of his moves, but you have five years. With that much time, even if you don’t know anything about chess, you can just systematically map out every conceivable move and every conceivable counter-move for a hundred turns, then pick the best one. That’s what a machine does, and that’s also why people stopped considering chess as a good way to evaluate AI.
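Here is a minimal sketch of that "map out every move and counter-move, then pick the best one" approach. Chess is far too large to enumerate this way, and real chess programs rely on pruning and heuristics on top of the search, so the sketch uses a toy game I picked for illustration: a pile of stones, each player removes 1 to 3 per turn, and whoever takes the last stone wins. The names `best_outcome` and `best_move` are hypothetical.

```python
from functools import lru_cache

MAX_TAKE = 3  # assumed rule of the toy game: remove 1 to 3 stones per turn

@lru_cache(maxsize=None)  # remember positions already mapped out
def best_outcome(stones: int) -> int:
    """Return +1 if the player to move can force a win, otherwise -1."""
    if stones == 0:
        # The opponent just took the last stone, so the player to move has lost.
        return -1
    # Try every legal move; the opponent's best result is our worst, hence the minus sign.
    return max(-best_outcome(stones - take)
               for take in range(1, min(MAX_TAKE, stones) + 1))

def best_move(stones: int) -> int:
    """Pick the move that leaves the opponent in the worst possible position."""
    moves = range(1, min(MAX_TAKE, stones) + 1)
    return max(moves, key=lambda take: -best_outcome(stones - take))

print(best_move(10))  # prints 2: leaving 8 stones is a forced loss for the opponent
```

The program plays this game perfectly without any notion of strategy. It simply checks everything, which is exactly the point of the five-years-per-move thought experiment.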

Chess is an abstracted analogy for war, and AI failed in real wars too. Machines can help humans pick out targets in an air war, which is mostly black and white like a chess board, but we still to this day do not have AI-controlled robot soldiers who can navigate the complexities of ground combat. Machines aren’t even infallible for air combat. Low-flying Soviet-era drones are still able to soar past even the most advanced anti-air defenses, both on the NATO side and on the Russian side. Billion-dollar submarines still hilariously crash into undersea mountains. Advanced warships still collide with slow-moving civilian freighters. Tesla cars still routinely collide with other vehicles. The common problem in all these scenarios: the more complexity, nuance, and “clutter” there is, the more useless computers and AI assistance become at detecting threats. This principle holds true in other fields as well. Even the most sophisticated and well-funded law enforcement agencies in the world are still laughably useless at profiling serial killers and preventing terrorist attacks. One might think that it would be possible to build the perfect computer model to flag mentally unstable and ideologically motivated people before they commit crimes, but apparently not. The perfect crime-free world enjoyed by Tom Cruise in Minority Report is still out of our reach. Sorry.

If you still think I’m being unfair, here are some “good” AI art examples from the article I quoted above.

Notice that if you try to apply perspective lines to these designs, they don’t work at all. Especially the second image. The AI was presumably trying to imitate the “Persian” aesthetic of a luxurious palace, but did so mindlessly. Imagine if you saw this room and lopsided furniture in real life, and how weird and horrifying it would be. The Winchester Mansion would look normal.

This AI image of a chainsaw is particularly revealing, and provides insights into what’s wrong with the other pictures:

Our machine competitors can generally get away with organic shapes that don’t have to rigidly comply with the rules of symmetry and perspective, but still, not really. Note how the AI doesn’t understand the straight lines of the blade. Or all those random artifacts in the neutral areas of the image. Real artists throw in some random gibberish around the subject of their paintings, and the AI tried to copy that technique, but without any understanding of why.

Some human artists like Picasso master the art of cubism and can create amazing art that does not comply with standard perspective. That’s because these artists have imagination, context, and a solid understanding of the physical world they’re creating a parody of on canvas. An AI doesn’t understand anything, so it can’t master traditional perspective, cubism, or anything else. It can only mimic patterns of pixels, and it does that badly.

AI enthusiasts will no doubt argue that it will get better. Okay, but no. It won’t. Improvement would require understanding. Our future robot overlords will presumably be able to do this, but not this garbage we’re seeing now.

All that said, I do think AI-generated art will have some impact on commercial art, but not as much as many people think. Some unimaginative indie authors will save a few dollars by using AI-generated art, which is fine. But the big publishing houses won’t. The risk of a major book release underperforming far outweighs the benefits of saving a few hundred bucks on a decent cover artist.

What I do think AI has a high probability of doing is further driving down the cost of royalty-free images and clip art used for books, blog posts (like mine), and illustrations. Look at the generic illustrations in, say, a textbook. This is a job AI imagery would do fairly well, but it’s not a big deviation from the huge stock imagery resources that already exist.

As for contests and awards, AI art will probably end up in a similar niche as chess computers. There will be a few competitions just for them, but machine players will be banned everywhere else. And this will be an extremely easy rule to enforce. Chess competitions are exceedingly good at looking for warning signs of cheating, and usually catch them. As for AI assistance in art, there’s a precedent for catching that kind of cheating too. Consider, for example, photography competitions. Adobe Photoshop and platforms like it have ways of unnaturally altering a photograph (and some of these tools influenced AI-generated art, incidentally). But serious photography competitions are managed by people with decades of experience in the field, who can easily check if a photographer cheated in his entry. Do you think professional artists will not be able to detect AI assistance? Photography competitions can ask entrants to provide the raw files to check for unfair editing, and the same can be done with any other form of digital art. For example, a Photoshop painter could be asked to provide the PSD file he used to create the image. If there are layers he clearly got from an AI tool, he’s disqualified.

I have seen some people on the internet claim that this technology will progress to the point that not only will cover artists be replaced by AI, the authors themselves will be replaced too. AI will be able to churn out pulp fiction as good as or better than a human writer’s. I understand the fear, and the AI-generated pulp romance novel has been a comedic trope since the 1970s, possibly earlier, but at this point it’s not something to worry about. There are two reasons to not worry.

The first reason is that commercial art and long-format, or even short-format, fiction are two different mediums. A flashy jpeg on social media only needs to sustain scrutiny for a few seconds at most. Think of the boat/dragon/girl images I showed you earlier. A typical person might not notice the flaws at first glance, but they don’t hold up any longer than that. A typical novel is 80,000-200,000 words. If it’s garbage, the reader will stop and be angry.

The second reason is related to medium as well. The idea of an AI replacing human writers and producing copy has already been thought of, and tried. I have played with some of these copy-generating AIs before, and they’re all terrible. These models do make at least some effort to mix up the human texts they steal from, but it is still very obvious that the output is outright plagiarism. Why does AI-generated art succeed while AI-generated copy fails? Simply, there are a practically infinite number of ways to arrange colored pixels on a screen, enabling AI to plagiarize artists without being too obvious about it. English has, by some counts, the largest vocabulary on earth, with about 172,000 words. Only some of those words come up in day-to-day normal conversations, making the pool of elements an AI has to work with even smaller. Commercial art that only needs to hold the viewer’s interest for a few seconds can get away with random bullshit that doesn’t make sense. It’s the equivalent of those Windows screensavers that 90s kids are all familiar with. But no normal person can tolerate a badly written AI text. It can’t be done. I’m not saying AI can’t write text; it just has to be capable of thinking first, which it currently is not.

Lastly, art, regardless of medium, is generally about conveying a message. That’s another crucial reason I think major companies like publishing houses won’t ever embrace AI-generated images. The stereotypes and propaganda conveyed to us in our day-to-day lives are just too nuanced to be properly replicated by a machine. Yes, an AI can be fed political cartoons of a certain flavor, but it won’t understand the why behind those artistic decisions, so it won’t be able to properly convey them. Just like the robot that rescued Will Smith wouldn’t be able to understand why he was supposed to rescue the little girl first.

However, I do have some serious concerns about AI-generated art, none of which I have seen discussed online. Why did AI art suddenly become so popular, and why are news outlets peddling the value of it so hard? This is eerily similar to how cryptocurrency suddenly became big. How did all of these fairly well-staffed and well-financed tech companies suddenly spring into existence all at once? I can’t help but think some very powerful and rich people wanted to force this industry into our lives, and they have an ulterior motive for doing so, and that motive goes beyond mere profit.

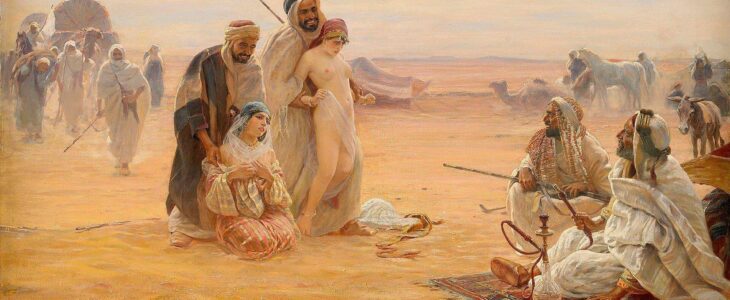

Like every big tech “innovation” over the last couple of decades, AI art is heavily controlled right out of the gate. For example, every AI art platform I’ve seen so far has explicitly stated rules (or at the very least back-end controls) against pornographic and extreme content. Which is fair and all, but this is a form of control that is implicitly accepted just by the act of using the platform. Look at how Facebook has completely altered the way we use the internet, and how even “normie” users have to carefully police themselves, because any slightly-naughty word, even in an innocent context, can get your account locked. Art has always been on the fringes of what is socially acceptable. I mean, really. Consider orientalist art pieces of soft pale-skinned virgins being thrown into the arms of cackling Arabs. Is this not just a little naughty, even?

Ian Kummer

All text in Reading Junkie posts is free to share or republish without permission, and I highly encourage my fellow bloggers to do so. Please be courteous and link back to the original.


My concern has always been that AI-generated anything makes ppl as dumb as AI. Ppl get lazy as observers and thinkers, so it’s not the AI that becomes smarter, it’s humans who become dumb, rigid, incapable of analysis or synthesis. And your idea of somebody being behind it seems right. It’s very likely to be part of the control machine.

Btw, I absolutely hate all machine translation algorithms…

I feel the whole debate misses the point more often than not. AI is a tool just like automatic assembly lines, it puts together what you program it for out of things it’s programmed to take in. People who argue it’s going to make humans obsolete never studied technology. People who argue it’s useless aren’t much better off.

What it’s great for is brainstorming concept art based on preexisting things, and for being a step in the overall creative process of a human. I know an artist who uses it to visualize ideas and basic backgrounds on which he then paints his actual works that aren’t tracing anything in the generated image. His every post gets spammed by accusations of him stealing everything because AI generated a background, even though he posts timelapses of his progress too and it’s clear he did it by hand after the initial step.

So, no, AI isn’t ever going to replace human creative work, that much isn’t even in dispute by anyone with a functioning brain and some life experience. People who try to rely on it alone are delusional. But that doesn’t mean it’s a useless gimmick that must be abandoned or is dangerous for some reason. AI pieces unmodified are trippy amalgams, but a human can then go on and edit them and turn them into actual art, with it given meaning by the edits of the person doing it.

As for why corporations love it, well, it’s not sinister so much as it is economical, whether in a delusional way or not. Some of them may believe the above, that they can get away with not employing human artists anymore (and are now in line for a reality check). But others recognize that it can ease some parts of the artistic process. In particular in “generic” art where it’s not art so much as it is a space-filler, as an early phase for drawing actual images, and with great potential for assisting animation. Again, in all these cases it’s not a replacement for humans, but a tool in the hands of one or several – not unlike a programmed machining table and its operator. Just as with heavy industries, it has the potential to speed up some aspects of production and increase potential output quite a bit without necessarily dropping the quality of the final product.

Obviously people who try to claim purely generated pictures as their own self-made art are scammers. But that doesn’t mean the tool is not legitimately useful for other purposes.

I tried that network in Discord.

1. It produced amazing styling overall

2. It has some vague understanding of analogues on the level of a 5-year-old kid. Like wheels being a machine’s legs.

I asked for a “threelegged bus” and was expecting some mix of Totoro and Wells’ Martians. Got some more or less bus-like shapes with 3 axles.

3. It has zero appreciation of internal structure. Limbs would often end in a small cloud of meast. Of the aforementioned buses most were brick-like, yet one was Г-shaped like those absurdist paintings by Escher and Reutersvärd.

4. It totally cannot Google for unknown terms or use GPT-based speech recognition (like that AI Dungeon ‘game’/’novel’) to get “meaning” and connections between words.

It is merely a statistical thing. Each keyword activates a few concepts, meaning it increases their probabilities; those in turn give boosts and penalties to the probabilities of smaller concepts, etc.

When the drawing sequence goes luckily, we have very plausible dog heads.

With less lucky runs the dog would have three eyes or nostrils seeded already, before two of them can drive the probability of more eyes down. Uncanny valley is not even the word for it.
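The "seeded before the probability can be driven down" idea can be sketched as a toy simulation. To be clear, this is not how any real image model works. It is an invented illustration in which eyes are seeded slot by slot, two already-visible eyes suppress further eyes, and that suppression may arrive late. The function `draw_head` and all its parameters are made up for this sketch.

```python
import random

def draw_head(rng, slots=6, eye_chance=0.5, feedback_delay=0):
    """Return the slot indices where an eye was seeded on this toy dog head."""
    eyes = []
    for slot in range(slots):
        # Only eyes seeded more than `feedback_delay` steps ago are "visible" yet.
        visible = sum(1 for e in eyes if slot - e > feedback_delay)
        # Two visible eyes drive the chance of seeding another eye down to zero.
        chance = eye_chance if visible < 2 else 0.0
        if rng.random() < chance:
            eyes.append(slot)
    return eyes

rng = random.Random(1)
instant = [len(draw_head(rng, feedback_delay=0)) for _ in range(1000)]
delayed = [len(draw_head(rng, feedback_delay=2)) for _ in range(1000)]
print(max(instant), max(delayed))
```

With instant feedback the head never gets more than two eyes. With delayed feedback a third eye can slip in before the first two are "noticed", which is the unlucky run described above.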

All in all, neural nets are already good at making miniatures, where small details would not be seen but the vague “feeling” matters.

Maybe they would do better with capturing meaning in the coming years.

I also think the absurdist painters like Dali would fall first. The natural naive innocence of neural nets seems to generate terrifying abominations much more easily than human painters’ conscious attempts at controlled insanity.

I am too lazy to upload and annotate my own few layman experiments, but here are some artists who tried it for real.

I have to admit, their pictures are much better than what I saw happening on “free 50” runs some months ago. Maybe the commercial service gave them more control, or the net actually learned a lot since then.

Artist: https://author.today/post/324774

Publisher? https://author.today/post/305420

i’d say the two camps are the same ones we’ve had for several centuries: idealists vs materialists. the majority of “boring, uninteresting” people fall into the latter hence their “boringness”. this leads to the further division between “art” and “entertainment”. AI might be able to write a catchy top 40 pop song but it will never write something with the lyrical depth of tom waits (annoying voice and music but i’ll admit a great lyricist) or rozz williams and (despite how mechanical they already sound) never reach the musical complexity and strangeness of an aphex twin or autechre.

art is not science. only dumb f_cks susceptible to scientism think it can be. ditto thought in general.

p.s. odd to mention i, robot but not blade runner. i’ve…seen…things…

Notorious K.I.M. https://twitter.com/qiovan/status/1601267653431414784

The whole article reeks of an artist justifying how special and different humans are from AI. Unless you are religious or spiritual and believe in some form of soul (or similar), our brains are just very advanced computers. Ask a baby to paint and it will splurge its hands in paint and smear it randomly on the paper (if it could even understand your request). Teach that baby from childhood for 30 years how to paint, and by the time it’s an adult it will indeed be able to produce wonderful things. That’s all that’s happening here. AI art is still in its infancy; give it time and it will equal what most humans can do. What is going to be harder to help it understand is emotion, as computers currently don’t understand that concept. As humans we barely understand what that is or how our brains work. All that said, in the meantime, while it learns and catches up (look at Midjourney’s versions 1 to 4; the difference is staggering in a short space of time), it remains a wonderful tool for ND people who find putting their thoughts into pictures difficult, and as a tool in the artistic process it’s also a great asset. Where 20 years ago we didn’t have digital art programs, now we do, and they all contain elements of AI art. Is animation not art? Animation is nearly all AI assisted these days. It reeks of gatekeeping to be dictating what constitutes art or even good art.